LLM has changed our views on technology and our very idea of what a language is in the last two years. Entering 2024, December, to follow a mysticism that defied knowledge of the models' abilities, mechanisms behind them, and how they are geared to solve problems, we'll plunge deeper with this elaborate and complete guide into LLMs—evolution, uses, and future prospects.

What Is a Large Language Model?

They are Artificial Intelligence systems that learn to understand human text and generate it like humans from a huge textual database. That is what the deep learning nature of the modern neural network enables for LLM to predict the next word in a sentence and create coherent paragraphs, sometimes even stimulating conversations to some extent. Other examples of the more popular ones include OpenAI's GPT-3 and GPT-4, Google BERT, and the Meta Llama family.

Understanding the LLM Large Language Models(LLM) With Examples

It all began with simple neural network models, but some of the major breaking milestones for LLM are:

RNN and LSTM:

For all their early success, these all gave rise to a new class of recurrent neural networks and Long Short-Term Memories that maintained context through hidden states when processing sequential data. However, they too had problems with long-distance dependencies.

Well, Vaswani came up with the concept of transformers way back in 2017.

They removed this bottleneck from the sequences, further providing convertible cluster calculations that, in principle, could be supported in acceleration by processors. It is in these transformers, with their self-attention mechanisms, that the importance of every word is weighed against the others in the sentence.

BERT, 2018:

This affects pre-training large corpora in NLP and fine-tuning tasks. Due to bi-directional training, BERT can contextualize relating events to previous and following words.

GPT-3, 2020:

GPT-3 has 175 billion parameters, so it's definitely the most supercomputer model in generating text without competition. It generates human text and can answer questions; it can even write code snippets.

GPT-4 and Beyond -

This extends the models to be larger in scale and capacity, which can further improve model performance. Otherwise stated, GPT-4 is going to have many more parameters and tuning procedures that would then contribute to high performance on different kinds of tasks and general achievement in smooth environments.

GPT-4o Mini: -

This model is the most cost-efficient small model of all OpenAI models. GPT-4o mini scores 82% on MMLU datasets. GPT-4o mini will offer broad applicability, low cost, and latency for applications chaining or parallelizing large numbers of model calls. This especially concerns applications that pass a large volume of context to the model, like source code repositories or conversation history, and those providing real-time text responses to customers, such as customer support chatbots.

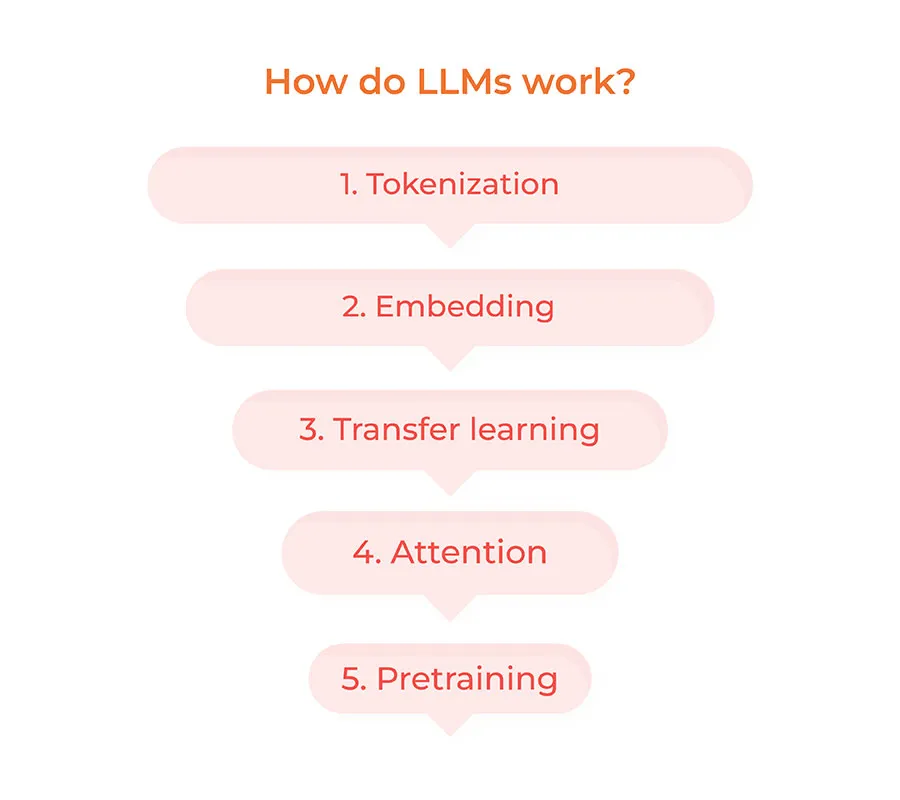

How Does the Large Language Model Work?

LLMs are just a more complex variant of the transformer architecture, and right now they have been using what's called self-attention mechanisms, which determine how relevant every word in a sentence is compared to all the others. Following is a grossly simplified explanation of how they work:

Tokenization

This is a process whereby input text will be broken down into substring elements called tokens. They can be words, sub-words, or even single characters. Embedding refers to how number vectors capture semantic meaning for each token. This is what gets learned during training, and hence it learns all the different relationships between words.

Attention Mechanism

The Attention Mechanism thus derives additional contexts and meanings from relationships built across tokens through the self-attention layers. This will, therefore, enable a model to focus on the relevant parts of the input while making a prediction.

A Feedforward Neural Network

A feedforward neural network Processes these vectors, weighted by these attention weights, to make a prediction. It goes through successive layers of transformations as it refines this understanding concerning the input.

Output Generation

The next token, word, or even sentence is inferred by learning patterns. Even larger chunks of text can be generated through iterative repetition.

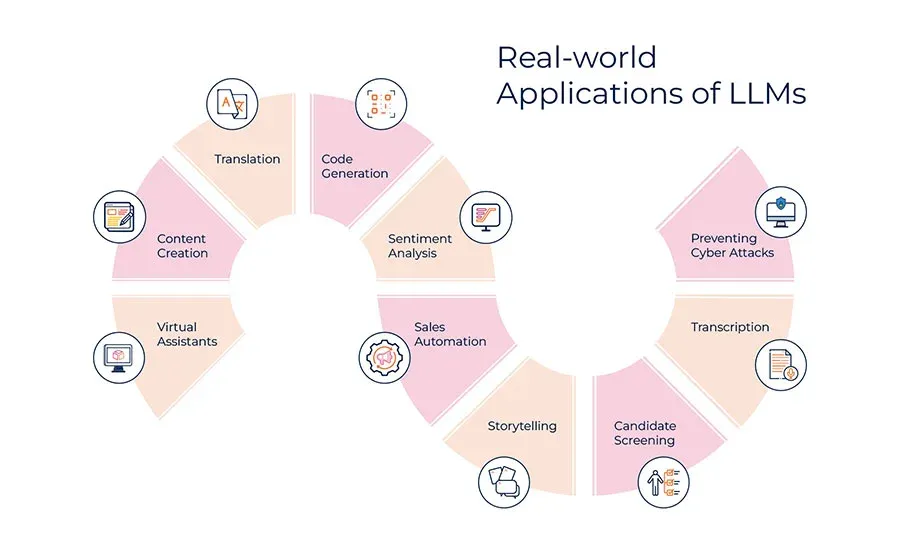

Let’s Check Some LLM Applications

LLMs can have many more applications in many domains: -

Content Generation:

Generative Articles, Blogs, and Creative Writing. For example, GPT-3 is used to write poetry, stories, and technical documentation.

Customer Support:

Triggers chatbots and virtual assistants, so more sophisticated LLMs that can handle complex queries with relevant information will lead to better customer satisfaction.

Translation:

Very accurate translation of source text into another language LLM would understand the nuance and context much better and hence can come up with more accurate translations than traditional methods.

Code Generation:

It should assist during code development while one is writing and debugging. Sometimes tools like these would be a prescription of lines of code – whole functions if necessary – based on context, such as GitHub Copilot.

Medical Chatbots:

This gives out the medical diagnosis and contacts between doctors and patients. An LLM can review various documents concerning a patient's case to give probable diagnoses and inform patients about what they are faced with and the available treatments.

Ethical Concerns

LLMs are very promising but also raise severe concerns that seem ethical and professional. Bias contained in a training dataset can be inherited into an LLM and produce unfair or discriminatory results. One of the bigger challenges in the ethical deployment of AI models is how to control bias. Research is underway in which techniques are being investigated for methodology identifying and mitigating bias in both training data and model outputs.

LLM Misinformation:

LLM may provide information that, though plausible, is incorrect. In doing so, this further confuses the fact-checking tasks. There are fears about what it portends for the spread of misinformation and the implications for society.

Privacy:

Most sensitive data requires extreme levels of privacy to avoid any instances of misuse. Thus, organizations will develop very robust practices regarding data protection while keeping adherence to regulations like the GDPR.

Environmental Impact:

Huge models require huge computational resources, which have an environmental impact. Efforts are at hand to develop the most effective training methods that reduce the carbon footprint of AI research.

Large Language Models(LLMs) in 2024 and Beyond

Some of these pipeline-level changes will add to a wide picture for future Large Language Models(LLMs) – specifically in a subfield like the one below by 2024. Better training methods:

1 Sparse color mechanism: Sparse attention and model pruning mechanisms have already started drastic changes at the computational cost of LLM training. It would manifest in knowledge distillation into smaller models that could be trained without losing performance.

2 Continuous Learning: Continuous learning causes models to evolve and change. This approach will help update the LLM regularly without the need to complete the process backward. Improved construction industry.

3 Better hardware: With the development of TPU and other construction intelligence chips, the long-term development of hardware will continue. These advances will lead to better training and consideration of great examples. Although still in development, quantum computing will be exponentially faster for certain types of calculations. Successful integration can transform LLM education and thinking with the help of quantum technology.

4 Modular and hybrid model: A new model that combines many special small models into a single system will provide better performance and flexibility eight. This type of model can be designed to improve the performance of specific projects.

5 Bias Reduction: It can be argued that reducing bias in LLM is very important. Improving training data management and algorithm integrity is important to ensure that returns from the model are not only fair but also unbiased.

See Also: Explore AI Security: Risks, Challenges, Best Practices

Conclusion

As we enter 2024, Large Language Models (LLMs) are set to further transform how we use technology, from enhancing customer support to advancing diagnostics. However, with their potential come important challenges, such as managing biases, combating misinformation, and safeguarding privacy. It's crucial to address these issues to ensure responsible and effective use of these technologies.

The future of LLMs is full of promise, offering new opportunities across various fields. Aspire Softserv can help you navigate this evolving landscape, providing the expertise needed to harness these advancements while maintaining the highest standards of integrity and responsibility for your business.

Transform Your Business with Advanced LLM Solutions