Artificial Intelligence (AI) has transformed industries, from healthcare to education, by enabling machines to process and generate human-like text, answer questions, and perform complex tasks. At the heart of these advancements are language models, which are algorithms trained to understand and produce language. While large language models (LLMs) like GPT-4 or Llama have dominated headlines due to their impressive capabilities, they come with significant drawbacks, such as high computational costs and resource demands. Enter Small Language Models (SLMs)—compact, efficient alternatives that are reshaping how AI is developed, deployed, and accessed. This blog explores the technical foundations of SLMs, their role in making AI more accessible, and their impact on performance across various applications.

What Are Small Language Models?

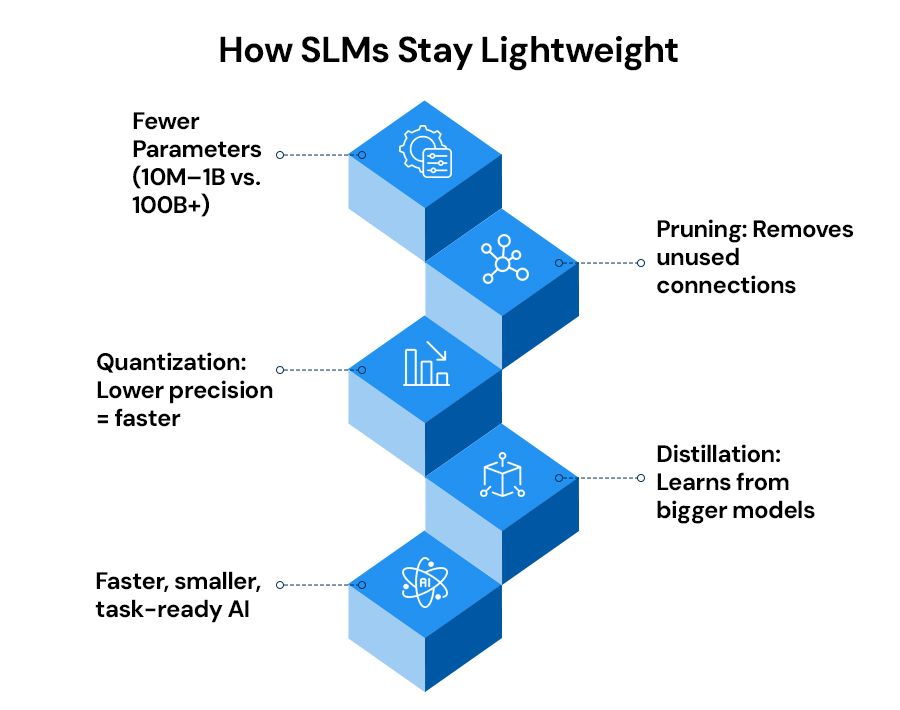

Small Language Models are AI models designed to process and generate natural language with far fewer parameters than their larger counterparts. While LLMs often have tens or hundreds of billions of parameters (e.g., GPT-3 with 175 billion), SLMs typically range from a few million to a few billion. Parameters are the numerical weights a model learns during training and uses to make predictions, so fewer parameters generally mean less memory and less computational power for both training and inference.
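To make the link between parameter count and hardware concrete, here is a rough back-of-the-envelope sketch in Python. It counts only the bytes needed to store the weights. The function name `weight_memory_gb` and the 1-billion-parameter example model are illustrative assumptions, not figures taken from any specific model card.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: every parameter is stored at the same numeric precision.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, precision: str = "fp32") -> float:
    """Return weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    models = {
        "GPT-3 (175B parameters)": 175_000_000_000,
        "hypothetical 1B-parameter SLM": 1_000_000_000,
    }
    for name, n in models.items():
        for precision in BYTES_PER_PARAM:
            gb = weight_memory_gb(n, precision)
            print(f"{name:32s} {precision}: {gb:8.1f} GB")
```

At 32-bit precision the 175-billion-parameter model needs about 700 GB for its weights alone, while a 1-billion-parameter model needs about 4 GB.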

SLMs are built on the same kinds of architectures as LLMs, most commonly the transformer, which stacks self-attention and feed-forward layers to process input data. What sets SLMs apart is how they are made compact, using techniques like pruning, quantization, and knowledge distillation. Pruning removes weights or connections that contribute little to the model's output, quantization reduces the precision of the stored numbers (e.g., from 32-bit floating point to 8-bit integers), and knowledge distillation trains a smaller "student" model to reproduce the outputs of a larger "teacher" model. These methods make SLMs lighter and faster while retaining much of the functionality of larger models.
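The first two of these techniques can be illustrated on a plain list of numbers. The sketch below is a toy version under simplifying assumptions: it uses magnitude pruning and symmetric 8-bit quantization, which are only one common variant of each technique, and the function names and sample weights are made up for illustration. Production toolkits apply these ideas per layer on tensors.

```python
# Toy illustrations of magnitude pruning and 8-bit quantization.
# The weights below are arbitrary example values, not from a real model.


def magnitude_prune(weights, fraction):
    """Zero out the given fraction of smallest-magnitude weights.

    Ties at the cutoff magnitude are zeroed too, so slightly more than
    `fraction` of the weights may be removed.
    """
    k = int(len(weights) * fraction)
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    return [0.0 if abs(w) <= threshold else w for w in weights]


def quantize_int8(weights):
    """Symmetric linear quantization: floats -> integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float values."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.42, -0.07, 0.91, -0.33, 0.02, -0.58]

    print("pruned:   ", magnitude_prune(weights, 0.5))

    q, scale = quantize_int8(weights)
    print("int8:     ", q)
    print("restored: ", [round(v, 3) for v in dequantize(q, scale)])
```

Each quantized weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale factor per weight.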

Why SLMs Matter for Accessibility

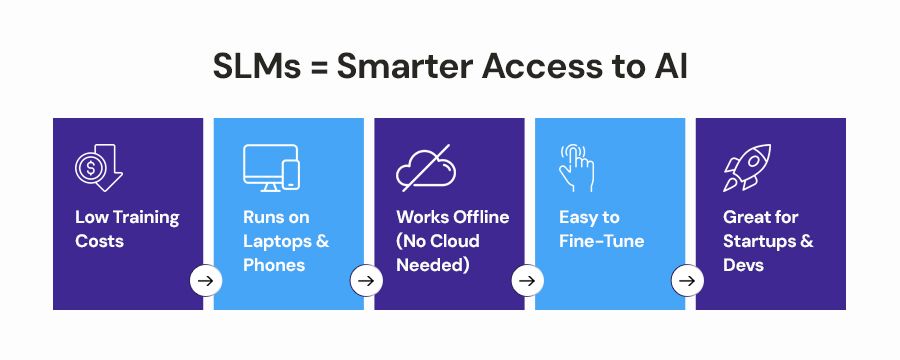

Accessibility in AI refers to the ability of individuals, businesses, and organizations, regardless of their resources, to use and benefit from AI technologies. SLMs are pivotal in this regard because they lower the barriers to entry in three key ways: cost, infrastructure, and usability.

Lower Computational Costs

Training and running LLMs requires vast computational resources, often involving clusters of high-end GPUs or TPUs that cost millions of dollars. For example, published estimates put the energy used to train a model like GPT-3 on the order of the annual electricity usage of a hundred or more households. SLMs, by contrast, require significantly less computing power. A model with 1 billion parameters can often be fine-tuned on a single high-end GPU, and with parameter-efficient methods sometimes even on a powerful consumer-grade laptop. This reduced cost makes AI development feasible for startups, small businesses, and academic researchers who lack access to large-scale computing infrastructure.
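A rough memory budget helps explain why a 1-billion-parameter model can fit on a single GPU. The sketch below assumes full fine-tuning in 32-bit precision with the Adam optimizer, which keeps two extra values per parameter. It deliberately ignores activations and framework overhead, so real usage will be higher. The function name is a hypothetical helper for this post.

```python
# Back-of-the-envelope memory for full fine-tuning with Adam in fp32.
# Assumptions: 4 bytes each for the weight, its gradient, and Adam's
# two moment estimates. Activations and overhead are NOT included.

BYTES_FP32 = 4


def adam_training_memory_gb(num_params: int) -> dict:
    """Return a per-component memory estimate in GB (1 GB = 10**9 bytes)."""
    weights = num_params * BYTES_FP32
    gradients = num_params * BYTES_FP32
    optimizer_state = 2 * num_params * BYTES_FP32  # first and second moments
    parts = {
        "weights": weights,
        "gradients": gradients,
        "optimizer_state": optimizer_state,
        "total": weights + gradients + optimizer_state,
    }
    return {name: size / 1e9 for name, size in parts.items()}


if __name__ == "__main__":
    for label, n in [("1B-parameter SLM", 1_000_000_000),
                     ("175B-parameter LLM", 175_000_000_000)]:
        print(label, adam_training_memory_gb(n))
```

Under these assumptions the 1-billion-parameter model needs roughly 16 GB before activations, while the 175-billion-parameter model needs about 2,800 GB and has to be spread across many accelerators.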

Reduced Infrastructure Demands

Deploying LLMs typically involves cloud-based servers or specialized hardware, which can be expensive and complex to manage. SLMs, with their smaller footprint, can run on edge devices like smartphones, IoT devices, or low-cost servers. For instance, models like Google’s MobileBERT or Hugging Face’s DistilBERT can perform tasks such as text classification or sentiment analysis directly on a mobile device without needing constant internet connectivity. This capability is transformative for applications in remote areas or industries where data privacy is critical, as it allows AI to function offline and locally.

Simplified Usability

SLMs are easier to fine-tune and deploy than LLMs, making them more approachable for developers with limited expertise. Fine-tuning an SLM for a specific task, such as summarizing medical records or generating customer support responses, requires less data and time compared to an LLM. Open-source SLMs, such as Hugging Face’s DistilBERT or Microsoft’s Phi-2, come with detailed documentation and community support, enabling developers to adapt these models to their needs without extensive machine learning knowledge. This democratization of AI tools empowers a broader range of professionals to integrate AI into their workflows.

Performance Advantages of SLMs

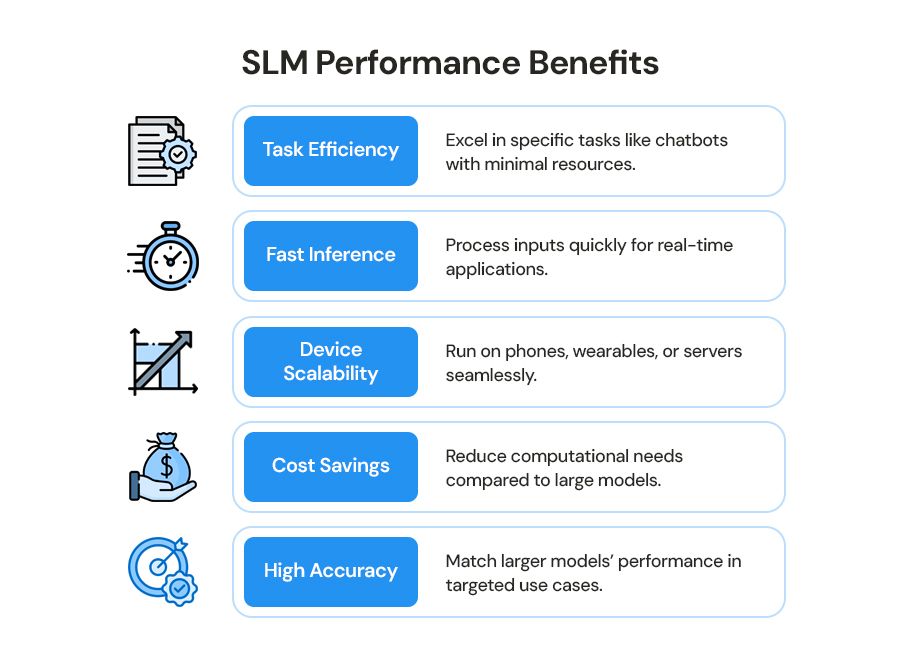

While SLMs are smaller than LLMs, they are not necessarily less capable in practical scenarios. Their performance is often comparable to larger models in specific tasks, and they offer unique advantages in efficiency, speed, and scalability.

Task-Specific Efficiency

SLMs excel in targeted applications where a general-purpose model with tens or hundreds of billions of parameters is overkill. For example, in customer service chatbots, an SLM can be fine-tuned to handle common queries like order tracking or returns with accuracy comparable to an LLM but at a fraction of the computational cost. The DistilBERT authors report that it retains about 97% of BERT’s performance on the GLUE language-understanding benchmark while being 40% smaller and 60% faster. This efficiency makes SLMs ideal for industries with well-defined AI needs, such as legal document analysis or real-time language translation.
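DistilBERT gets its compact size from knowledge distillation. As a minimal sketch of the core idea (not DistilBERT's full training objective, which combines several loss terms), the student is penalized for diverging from the teacher's temperature-softened output distribution. The logits and the temperature value below are made-up illustrative numbers.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]        # hypothetical teacher logits
    student_far = [2.0, 1.5, 1.0]    # student that disagrees with teacher
    student_near = [3.8, 1.1, 0.6]   # student that nearly matches teacher

    print("far student loss: ", distillation_loss(teacher, student_far))
    print("near student loss:", distillation_loss(teacher, student_near))
```

The loss shrinks as the student's predictions approach the teacher's, which is the signal that lets a smaller model absorb much of a larger model's behavior during training.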

Faster Inference Times

Inference—the process of generating predictions or responses—happens much faster with SLMs due to their compact size. For instance, an SLM running on a smartphone can process a user’s voice command in milliseconds, enabling real-time interactions. In contrast, LLMs often require server-side processing, introducing latency that can frustrate users. Faster inference is critical for applications like autonomous vehicles, where split-second decisions are necessary, or in healthcare, where real-time diagnostic tools can save lives.
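One way to see why size affects speed: when a model generates text one token at a time, each token typically requires reading all of the weights from memory, so memory bandwidth sets a floor on per-token latency. The sketch below applies that rule of thumb. The bandwidth figure and model sizes are illustrative assumptions rather than measurements of any real device, and actual latency also depends on compute, batching, and software.

```python
# Lower bound on per-token generation latency when inference is limited
# by how fast weights can be read from memory (a common rule of thumb).


def min_ms_per_token(num_params: int,
                     bytes_per_param: int,
                     bandwidth_gb_per_s: float) -> float:
    """Time to stream every weight once, in milliseconds."""
    model_bytes = num_params * bytes_per_param
    seconds = model_bytes / (bandwidth_gb_per_s * 1e9)
    return seconds * 1000


if __name__ == "__main__":
    bandwidth = 50  # GB/s, an assumed figure for a hypothetical edge device

    slm = min_ms_per_token(1_000_000_000, 1, bandwidth)      # 1B params, int8
    llm = min_ms_per_token(175_000_000_000, 2, bandwidth)    # 175B params, fp16

    print(f"1B int8 model:   at least {slm:.0f} ms per token")
    print(f"175B fp16 model: at least {llm:.0f} ms per token")
```

With these assumed numbers the small model has a floor of about 20 ms per token, while the large model would need about 7 seconds per token on the same hardware, which is why large models are served from clusters of accelerators instead.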

Scalability Across Devices

The ability to deploy SLMs on a wide range of devices—from cloud servers to wearables—enhances their scalability. For example, an SLM embedded in a smartwatch can monitor a user’s health data and provide personalized feedback without relying on a cloud connection. This scalability also benefits businesses, as they can deploy SLMs across thousands of devices without incurring the prohibitive costs associated with LLMs. In IoT ecosystems, SLMs enable edge devices to collaborate, sharing lightweight models to perform distributed tasks like environmental monitoring or predictive maintenance.

Challenges and Limitations

Despite their advantages, SLMs have limitations that developers and organizations must consider. Their smaller size means they capture less general knowledge compared to LLMs. For instance, an SLM may struggle with complex reasoning tasks, such as generating detailed research papers or understanding nuanced cultural references, where LLMs excel. Additionally, while SLMs are easier to fine-tune, they may require more careful optimization to avoid overfitting, especially when training data is limited.

Another challenge is the trade-off between size and versatility. SLMs are highly effective for specific tasks but lack the broad, multi-domain capabilities of LLMs. Organizations must weigh whether an SLM’s efficiency justifies its narrower scope or if an LLM’s flexibility is worth the added cost. Finally, while open-source SLMs are widely available, proprietary models may still require licensing fees, which could limit accessibility for some users.

Real-World Applications

SLMs are already making a significant impact across industries, demonstrating their value in practical settings. Here are a few examples:

Healthcare: SLMs power portable diagnostic tools that analyze patient data, such as medical imaging or symptom reports, directly on devices like tablets. This is particularly valuable in rural or underserved areas with limited internet access.

Education: Language tutoring apps use SLMs to provide real-time feedback on pronunciation or grammar, running entirely on a student’s smartphone.

Retail: E-commerce platforms deploy SLMs for personalized product recommendations, analyzing user behavior locally to protect privacy and reduce server costs.

IoT and Smart Homes: SLMs enable voice assistants in smart speakers to process commands offline, improving response times and reducing reliance on cloud infrastructure.

The Future of SLMs

The rise of SLMs signals a shift toward more inclusive and practical AI development. As research continues, we can expect SLMs to become even more efficient through advancements in model compression and training techniques. For example, ongoing work in federated learning, where a shared model is trained across many devices without centralizing their data, pairs naturally with SLMs because the models are small enough to be trained on the devices themselves. This could reduce the need for centralized training infrastructure and make SLMs accessible to even more users.
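The aggregation step at the center of federated learning is simple enough to sketch. In the federated averaging approach, each device trains locally and sends back only its updated weights, and a coordinator combines them in proportion to how much data each device used. The example below is a minimal illustration with made-up weights and sample counts; real systems add client sampling, multiple rounds, and privacy protections.

```python
# Minimal sketch of the federated averaging aggregation step.
# Each client contributes a weight vector and its local sample count.


def federated_average(client_weights, client_sizes):
    """Average client weight vectors, weighted by local dataset size."""
    total = sum(client_sizes)
    num_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(num_params)
    ]


if __name__ == "__main__":
    # Two hypothetical devices with tiny two-parameter models.
    client_weights = [[1.0, 2.0], [3.0, 4.0]]
    client_sizes = [1, 3]  # the second device trained on 3x as much data

    print(federated_average(client_weights, client_sizes))
```

Because the second device saw three times as much data, the averaged model lands closer to its weights. Only weight vectors cross the network, and raw user data stays on each device.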

Moreover, the open-source community is driving innovation in SLMs, with platforms like Hugging Face and GitHub hosting a growing number of models tailored to specific industries. This collaborative approach ensures that SLMs will continue to evolve, addressing niche use cases while maintaining high performance.

Conclusion

Small Language Models are revolutionizing AI by making it more accessible and efficient without sacrificing performance in many practical applications. Their lower computational costs, reduced infrastructure demands, and simplified usability open the door for small businesses, individual developers, and underserved communities to adopt AI technologies. While they may not match the versatility of larger models, SLMs offer a compelling balance of speed, scalability, and task-specific accuracy. As industries increasingly prioritize efficiency and inclusivity, SLMs are poised to play a central role in the future of AI, proving that bigger isn’t always better.
