Small Language Models (SLMs) are transforming artificial intelligence by prioritizing efficiency, accessibility, and practicality. Unlike large language models (LLMs), which demand extensive computational resources, SLMs deliver robust natural language processing (NLP) capabilities with far fewer parameters, making them well suited to a wide range of applications. This post draws on our four-part series to explore how SLMs are reshaping AI, from their foundational principles to their practical applications, operational benefits, and broader impact on accessibility. By examining their advantages and future potential, we highlight why SLMs are key to a sustainable and inclusive AI ecosystem.

Understanding the Foundation of SLMs

SLMs, as introduced in our first blog, Understanding Small Language Models: A New Era in AI, are compact AI models with millions to a few billion parameters, compared to the tens or hundreds of billions found in LLMs like GPT-3 (175 billion). Their smaller size reduces the need for high-end hardware, enabling faster training and deployment. Techniques like pruning (removing low-importance weights), quantization (storing weights at lower numerical precision), and knowledge distillation (training a small model to mimic a larger one) allow SLMs to retain most of their functionality while minimizing resource demands. For example, they can power on-device applications, such as Siri’s offline voice processing, without relying on cloud servers. This efficiency not only lowers costs but also improves privacy by processing data locally, a critical advantage for industries like healthcare and finance.
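To make quantization concrete, here is a minimal sketch in plain Python of symmetric 8-bit quantization, where a list of floating-point weights is mapped to integers that share one scale factor. The function names and the sample weights are hypothetical and chosen for illustration; production toolkits use more elaborate schemes (per-channel scales, calibration data), so treat this as a simplified picture of the idea rather than how any particular model is compressed.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero weight list to avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in quantized]


# Hypothetical weights, for illustration only.
weights = [0.82, -1.27, 0.03, 0.40, -0.55]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding moves each weight by at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 values:", q)
print("largest round-trip error:", max_error)
```

Each weight now needs one byte instead of the four used by a 32-bit float, at the cost of a small, bounded rounding error.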

The shift to SLMs reflects a broader trend toward sustainable AI. By consuming less energy, they address concerns about the environmental impact of large-scale models, making them suitable for organizations with limited resources, such as startups or academic institutions. Their design prioritizes specific tasks, ensuring high performance in targeted applications like customer support chatbots or medical transcription tools.

Exploring Diverse Applications

Our second blog, The Potential of Small Language Models in Modern Applications, outlined how SLMs enable real-time NLP across various sectors. Their lightweight nature makes them a natural fit for edge computing, where devices like smartphones or IoT gadgets process data locally. For instance, Google Assistant uses SLMs to handle simple commands offline, reducing latency and improving user privacy. In healthcare, SLMs summarize patient records on secure hospital systems, so sensitive data stays on site, which supports compliance with regulations like GDPR. Education benefits from SLMs through apps like Duolingo, which deliver personalized language lessons tailored to individual progress.

A standout feature of SLMs is their ability to support low-resource languages. Unlike LLMs, which require vast datasets, SLMs can be trained on smaller corpora, enabling NLP for languages with limited digital presence, such as many Indigenous and African languages. This fosters digital inclusivity, allowing communities with underdeveloped technological infrastructure to access AI-driven tools like translation or text-to-speech systems. SLMs’ adaptability makes them a versatile solution for both niche and widespread applications.

Optimizing Operations Across Industries

The third blog, How Small Language Models Boost Efficiency Across Industries, detailed how SLMs streamline workflows in sectors like customer support, finance, and retail. By automating repetitive tasks, SLMs reduce human workload and operational costs. For example, Zendesk’s Answer Bot uses SLMs to resolve FAQs by pulling answers from knowledge bases, freeing human agents for complex issues. In finance, American Express employs SLMs to detect fraudulent transactions by analyzing patterns in real time. Retail platforms like Amazon rely on SLMs for personalized product recommendations, using browsing history to suggest relevant items without heavy cloud dependence.

SLMs also excel in legal and manufacturing contexts. Law firms use them to summarize documents or review contracts, as seen with ROSS Intelligence, which aided legal research by retrieving relevant case law. In manufacturing, SLMs optimize supply chains by forecasting demand and detecting defects, as implemented by companies like Siemens. These examples illustrate how SLMs deliver fast, accurate results, making them valuable for businesses seeking cost-effective AI solutions.

Enhancing Accessibility and Performance

As explored in our fourth blog, The Impact of Small Language Models on AI Accessibility and Performance, SLMs democratize AI by lowering barriers to adoption. Their reduced computational needs allow small businesses, developers, and researchers to build AI solutions without expensive infrastructure. Open-source models like DistilBERT, available through Hugging Face, enable fine-tuning for specific tasks with minimal data and expertise. For instance, a developer can adapt an SLM for legal document analysis or educational tutoring with relative ease, broadening AI’s reach.
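A rough back-of-the-envelope calculation shows why smaller models lower the infrastructure barrier. The sketch below estimates the memory needed just to hold a model's weights at different numerical precisions. The parameter counts are approximate public figures (about 66 million for DistilBERT, about 110 million for BERT-base, 175 billion for GPT-3), the helper name is hypothetical, and the estimate ignores activations, optimizer state, and runtime overhead.

```python
# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

# Approximate parameter counts (assumed round figures).
MODELS = {
    "DistilBERT": 66_000_000,
    "BERT-base": 110_000_000,
    "GPT-3": 175_000_000_000,
}


def weight_memory_gb(num_params, precision):
    """Estimate gigabytes needed to store the weights alone."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for name, num_params in MODELS.items():
    sizes = ", ".join(
        f"{precision}: {weight_memory_gb(num_params, precision):,.2f} GB"
        for precision in BYTES_PER_PARAM
    )
    print(f"{name:<11} {sizes}")
```

Under these assumptions, DistilBERT's weights fit in a few hundred megabytes, which is within reach of a laptop or phone, while GPT-3-scale weights need hundreds of gigabytes even at reduced precision.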

Performance-wise, SLMs can rival much larger models in task-specific applications. DistilBERT’s authors report that it retains about 97% of BERT’s language-understanding performance on the GLUE benchmark while using about 40% fewer parameters and running about 60% faster. Faster inference is critical for real-time applications, such as healthcare diagnostics or autonomous vehicle systems. By running on devices as small as smartwatches, SLMs enable scalable deployment, creating opportunities for innovation in IoT ecosystems and beyond.
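DistilBERT takes its name from knowledge distillation, in which a small "student" model is trained to match the output distribution of a larger "teacher". The sketch below, in plain Python, shows only the soft-target term that is common in distillation setups: a KL divergence between temperature-softened teacher and student probabilities. The function names, the temperature value, and the example logits are illustrative assumptions, and this is not the full DistilBERT training objective.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature flattens them."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    kl = sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0
    )
    return kl * temperature ** 2


# Hypothetical logits for a three-class task.
teacher = [4.0, 1.0, 0.5]
student = [2.5, 1.5, 0.2]
print("loss when student differs:", distillation_loss(student, teacher))
print("loss when student matches:", distillation_loss(teacher, teacher))
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which is what pushes the smaller model toward the larger model's behavior.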

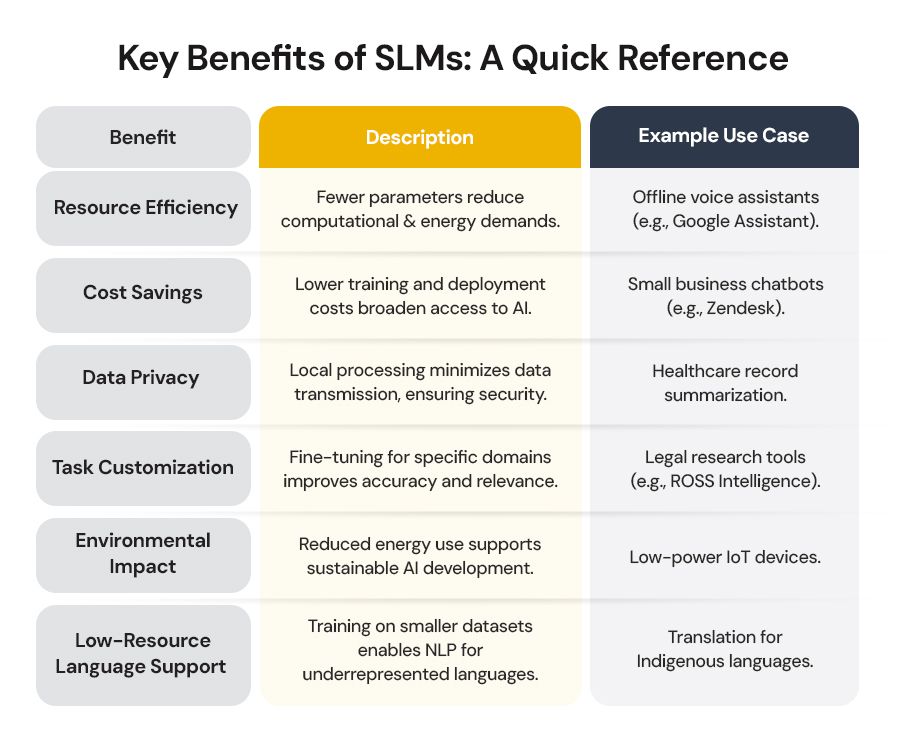

Key Benefits of SLMs: A Quick Reference

The table below summarizes the core advantages of SLMs, drawing from insights across our series, to provide a clear overview for developers and businesses:

This table highlights why SLMs are a practical choice, offering actionable insights for implementing AI solutions.

Looking Ahead: Challenges and Opportunities

SLMs face challenges that require careful consideration. Their smaller size limits their general knowledge compared to LLMs, making them less suited for complex reasoning tasks like generating detailed research papers. Fine-tuning SLMs demands careful optimization to avoid overfitting, particularly with limited data. Additionally, while open-source SLMs are widely available, proprietary models may involve licensing costs, which could restrict access for some users.
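One common guard against overfitting during fine-tuning is early stopping: track the loss on a held-out validation set and keep the checkpoint from the epoch where it was lowest, stopping once it fails to improve for a set number of epochs. The following is a minimal sketch of that logic in plain Python. The function name, the `patience` value, and the validation losses are hypothetical; a real training loop would compute the losses from actual data.

```python
def best_epoch_with_early_stopping(val_losses, patience=2):
    """Return the index of the epoch whose checkpoint would be kept."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # Validation improved: remember this epoch and reset the counter.
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break  # no improvement for `patience` epochs in a row
    return best_epoch


# Hypothetical validation losses: they fall, then rise as overfitting sets in.
val_losses = [0.92, 0.71, 0.64, 0.66, 0.70, 0.75]
kept = best_epoch_with_early_stopping(val_losses, patience=2)
print("keep checkpoint from epoch index:", kept)
```

With small datasets, a short patience window like this can stop training before the model starts memorizing its training examples.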

Despite these hurdles, the future of SLMs is bright. Advances in model compression could further reduce resource demands, enabling deployment in even more constrained environments, while privacy-preserving training approaches such as federated learning could let models improve without centralizing user data. The open-source community is driving innovation, with platforms like Hugging Face offering SLMs tailored to specific industries. For example, healthcare-focused SLMs could improve diagnostic accuracy, while education-oriented models could expand access to personalized learning. These developments will broaden SLMs’ impact, making AI more inclusive and practical.

A Vision for Inclusive AI

SLMs are poised to shape a future where AI is accessible to all. Their ability to run on edge devices could enable applications like real-time disaster response systems in areas with limited connectivity or affordable tutoring apps for students in remote regions. In healthcare, portable diagnostic tools powered by SLMs could deliver medical expertise to underserved communities, improving global health outcomes. Sustainability is also a key driver, as SLMs’ lower energy consumption aligns with efforts to reduce carbon emissions, making them ideal for low-power AI initiatives by governments or NGOs.

To fully realize this potential, ethical considerations must be addressed. Developers should prioritize diverse, unbiased training datasets to ensure SLMs serve all communities equitably, particularly for low-resource languages. Collaboration between researchers, policymakers, and industry leaders will be crucial to establish guidelines for responsible SLM deployment, ensuring privacy, fairness, and accessibility.

Conclusion

Small Language Models are redefining AI by balancing efficiency, accessibility, and performance. From their compact design and task-specific capabilities to their ability to streamline operations and broaden access, SLMs are proving that smaller models can deliver significant impact. As we’ve explored in our series, SLMs offer a sustainable and inclusive path forward, enabling businesses, developers, and communities to adopt AI without the barriers of large-scale models. As research and adoption grow, SLMs will continue to drive innovation, creating a future where AI is practical, equitable, and impactful for all.

Smarter AI. Lower Costs. Real Impact.