Imagine a world where you control devices simply by speaking, gesturing, or even thinking—no screens, no buttons, just natural human interaction. This is the promise of Zero-UI, a revolutionary approach to human-computer interaction that is reshaping how we engage with technology. For businesses, this represents both an exciting opportunity and a significant engineering challenge. Companies offering product development services are at the forefront of this transformation, building voice assistants, gesture-controlled systems, and even experimental brain-computer interfaces that could redefine accessibility and convenience.

The shift toward Zero-UI is driven by a fundamental truth: the most intuitive interfaces are often the ones we don't see. Traditional graphical user interfaces (GUIs) have served us well, but they require users to adapt to technology rather than the other way around. Zero-UI flips this dynamic, allowing technology to respond to human behaviour in ways that feel instinctive. Whether it's asking a voice assistant for the weather, waving a hand to skip a song, or using a brain-computer interface to control a prosthetic limb, these interactions reduce friction and can make technology more inclusive.

However, designing effective Zero-UI systems is far from simple. Each modality—voice, gesture, and brain-computer interaction—comes with its own technical complexities, from accurate speech recognition to precise motion tracking and reliable neural signal interpretation. Businesses investing in software product development services must carefully consider factors like usability, privacy, and real-world performance to create solutions that truly enhance the user experience.

In this article, we will explore the current state of Zero-UI technologies, the challenges engineers face in making them work seamlessly, and how businesses can strategically integrate them into their products. By understanding both the potential and the limitations of voice, gesture, and brain-computer interfaces, companies can make informed decisions about where and how to implement these innovations for maximum impact.

Understanding Zero-UI: Beyond the Screen

Zero-UI represents a fundamental shift in how humans interact with machines. Unlike traditional interfaces that rely on physical input devices like keyboards, mice, or touchscreens, Zero-UI systems respond to natural human actions—speech, movement, and even thought. This approach aligns technology more closely with innate human behaviours, reducing the cognitive load required to operate devices and making interactions feel effortless.

The concept of Zero-UI draws heavily on the ideas of designer Golden Krishna, who argued that the best interface is no interface at all. Instead of forcing users to navigate menus or memorise gestures, technology should anticipate needs and respond intuitively. This philosophy is now driving innovation across industries, from consumer electronics to healthcare and automotive systems. Companies providing product development services are increasingly tasked with creating these invisible interfaces, balancing cutting-edge technology with practical usability.

One of the key advantages of Zero-UI is its potential for accessibility. For individuals with physical disabilities, voice commands or gesture controls can provide independence where traditional interfaces fail. Similarly, brain-computer interfaces offer life-changing possibilities for those with limited mobility.

However, realising this potential requires careful engineering to ensure reliability across diverse users and environments.

Key considerations for Zero-UI accessibility include:

Designing for diverse speech patterns and physical abilities

Ensuring systems work reliably in different environments (noisy, dimly lit, etc.)

Providing alternative input methods when Zero-UI isn't practical

As we examine the three primary Zero-UI modalities—voice, gesture, and brain-computer interaction—it becomes clear that each has reached different levels of maturity and faces distinct implementation hurdles. Understanding these differences is crucial for businesses looking to incorporate Zero-UI into their products effectively.

Voice Interfaces: The Most Established Zero-UI Technology

Voice interfaces have become the most visible and widely adopted form of Zero-UI, thanks to virtual assistants like Amazon's Alexa, Apple's Siri, and Google Assistant. These systems have moved beyond simple command recognition to offer increasingly natural conversations, powered by advances in natural language processing (NLP) and machine learning. The success of voice technology in consumer applications has led to its expansion into enterprise environments, where voice-controlled systems are streamlining workflows in industries ranging from healthcare to manufacturing.

The engineering behind voice interfaces involves several sophisticated components working together. Automatic speech recognition (ASR) converts spoken words into text, and NLP then extracts meaning and intent from that text. The system then generates an appropriate response or action, whether that's answering a question, controlling a smart home device, or initiating a complex business process. The whole pipeline must respond almost instantly to preserve the feel of natural conversation. That requires significant computational resources and careful optimisation, which is where software product development services come in.
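To make the ASR-to-action pipeline more concrete, here is a minimal sketch in Python. It assumes speech has already been transcribed, so `transcribe` is only a placeholder and not a real ASR engine. It also replaces a trained intent model with a few hypothetical regular-expression rules. The intent names, slot names and responses are all illustrative.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Intent:
    name: str
    slots: dict = field(default_factory=dict)


# Hypothetical keyword rules standing in for a trained intent model.
INTENT_PATTERNS = {
    "get_weather": re.compile(r"\bweather\b(?: in (?P<city>[a-z ]+))?"),
    "set_lights": re.compile(r"\bturn (?P<state>on|off) the (?P<room>[a-z]+) lights?\b"),
}


def transcribe(utterance: str) -> str:
    """Placeholder for ASR: assumes the audio has already been turned into text."""
    return utterance.lower().strip()


def parse_intent(text: str) -> Intent:
    """NLU step: map free text to an intent name plus any extracted slots."""
    for name, pattern in INTENT_PATTERNS.items():
        match = pattern.search(text)
        if match:
            slots = {k: v.strip() for k, v in match.groupdict().items() if v}
            return Intent(name, slots)
    return Intent("unknown")


def handle(intent: Intent) -> str:
    """Action step: decide what to say or do for a recognised intent."""
    if intent.name == "get_weather":
        return f"Fetching the weather for {intent.slots.get('city', 'your location')}."
    if intent.name == "set_lights":
        return f"Turning {intent.slots['state']} the {intent.slots['room']} lights."
    return "Sorry, I didn't catch that."


def respond(utterance: str) -> str:
    """Run the full text -> intent -> action pipeline."""
    return handle(parse_intent(transcribe(utterance)))


print(respond("Turn off the kitchen lights"))  # Turning off the kitchen lights.
```

Production assistants use learned models for each stage. The overall shape is the same, though: text goes in, a structured intent comes out, and a handler acts on it.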

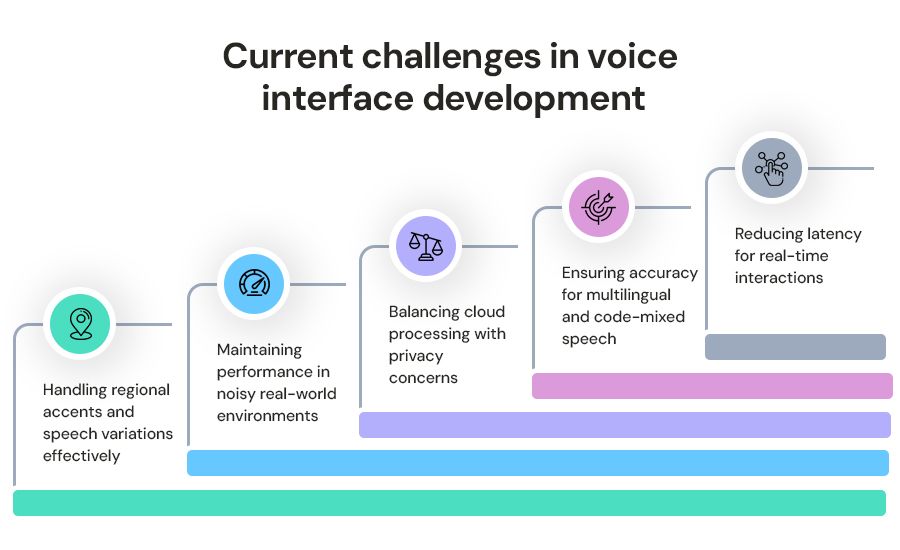

Current challenges in voice interface development:

Despite their maturity, voice interfaces still face significant challenges, from recognising diverse accents and speech patterns to filtering out background noise. Privacy concerns also persist, as always-listening devices raise questions about data collection and security. Engineers must balance functionality with these considerations, implementing techniques like on-device processing and clear consent mechanisms to build trust with users.

Looking ahead, the next generation of voice interfaces will likely incorporate more contextual awareness, remembering previous interactions and adapting to individual speaking styles. Multimodal systems that combine voice with other input methods may also become more common, allowing users to switch seamlessly between speaking and gesturing depending on what feels most natural in a given situation.

Gesture Control: Bridging the Physical and Digital Worlds

Gesture recognition technology creates a direct link between human movement and digital response, eliminating the need for physical controllers in many applications. This form of Zero-UI has found particular success in gaming (Microsoft's Kinect), virtual reality systems, and smart home controls. More recently, automotive manufacturers have begun incorporating gesture controls for infotainment systems, allowing drivers to adjust settings without taking their eyes off the road.

The technical implementation of gesture interfaces varies depending on the use case. Some systems rely on optical sensors and cameras to track hand movements, while others use ultrasonic or radar-based technologies that work in low-light conditions. Machine learning algorithms then interpret these movements as specific commands, which requires extensive training datasets to account for variations in human anatomy and movement patterns. This complexity makes gesture recognition a particularly demanding area for product development teams, as even slight miscalibrations can lead to frustrating user experiences.
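The sketch below gives a rough idea of how tracked movements can be mapped to commands. It uses a nearest-neighbour template matcher, loosely in the spirit of the $1 unistroke recogniser from HCI research. It assumes a hand tracker already supplies a sequence of (x, y) positions. The templates and the 16-point resampling are hypothetical choices. A production system would more likely use a model trained on a large dataset, as described above.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def resample(points: List[Point], n: int = 16) -> List[Point]:
    """Resample a trajectory to n points spaced evenly along its path length."""
    dists = [0.0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dists.append(dists[-1] + math.hypot(x1 - x0, y1 - y0))
    total = dists[-1]
    if total == 0:
        return [points[0]] * n
    out, j = [], 0
    for i in range(n):
        target = total * i / (n - 1)
        # Advance to the segment that contains this arc-length position.
        while j < len(points) - 2 and dists[j + 1] < target:
            j += 1
        seg = dists[j + 1] - dists[j]
        t = 0.0 if seg == 0 else (target - dists[j]) / seg
        (x0, y0), (x1, y1) = points[j], points[j + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def normalise(points: List[Point]) -> List[Point]:
    """Centre on the centroid and scale by the larger side of the bounding box."""
    xs, ys = [p[0] for p in points], [p[1] for p in points]
    cx, cy = sum(xs) / len(xs), sum(ys) / len(ys)
    scale = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    return [((x - cx) / scale, (y - cy) / scale) for x, y in points]


def prepare(points: List[Point]) -> List[Point]:
    return normalise(resample(points))


# Hypothetical gesture templates (y grows upwards).
TEMPLATES: Dict[str, List[Point]] = {
    name: prepare(path)
    for name, path in {
        "swipe_right": [(0, 0), (1, 0)],
        "swipe_left": [(1, 0), (0, 0)],
        "swipe_up": [(0, 0), (0, 1)],
        "swipe_down": [(0, 1), (0, 0)],
    }.items()
}


def classify(trajectory: List[Point]) -> str:
    """Return the template whose points are closest on average (1-nearest neighbour)."""
    candidate = prepare(trajectory)

    def distance(template: List[Point]) -> float:
        return sum(math.dist(a, b) for a, b in zip(candidate, template)) / len(template)

    return min(TEMPLATES, key=lambda name: distance(TEMPLATES[name]))
```

Resampling and normalising let a slow, large swipe and a quick, small one be compared on equal terms. The small wobble in a real hand path then costs only a small distance penalty.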

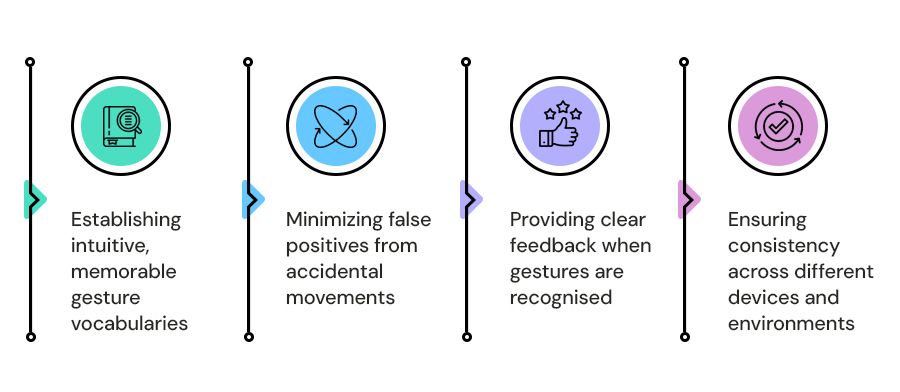

Critical factors in gesture interface design:

One of the primary limitations of current gesture systems is the lack of standardisation. Voice interfaces benefit from a shared convention: a wake word such as "Hey Siri" or "OK Google" followed by ordinary speech. Gesture-controlled products, by contrast, often each implement their own set of movements, forcing users to learn new interactions for different devices. There's also the issue of accidental activation: systems must be sensitive enough to detect intentional gestures while ignoring casual movements that weren't meant as commands.
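One common way to reduce accidental activation works in two steps. First, a gesture must be recognised with high confidence over several consecutive frames. Second, input is ignored briefly after the gesture fires. The gate below is a minimal sketch of that idea. It sits downstream of any per-frame classifier, and its thresholds and frame counts are hypothetical values that would need tuning for each device.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GestureGate:
    """Suppress accidental activations from a per-frame gesture classifier."""
    min_confidence: float = 0.8  # hypothetical: ignore low-confidence frames
    min_frames: int = 5          # hypothetical: frames a gesture must persist
    cooldown_frames: int = 15    # hypothetical: frames ignored after firing
    _candidate: Optional[str] = field(default=None, init=False, repr=False)
    _streak: int = field(default=0, init=False, repr=False)
    _cooldown: int = field(default=0, init=False, repr=False)

    def update(self, label: Optional[str], confidence: float) -> Optional[str]:
        """Feed one frame of classifier output; return a gesture only when it should fire."""
        if self._cooldown > 0:
            self._cooldown -= 1
            return None
        if label is None or confidence < self.min_confidence:
            # A weak or missing detection breaks the streak.
            self._candidate, self._streak = None, 0
            return None
        if label == self._candidate:
            self._streak += 1
        else:
            self._candidate, self._streak = label, 1
        if self._streak >= self.min_frames:
            # Fire once, then start the cooldown so one movement triggers one command.
            self._candidate, self._streak = None, 0
            self._cooldown = self.cooldown_frames
            return label
        return None
```

Raising `min_frames` makes the system harder to trigger by accident, but it also adds latency. That trade-off mirrors the sensitivity balance described above.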

Future developments in gesture technology may focus on more subtle interactions, such as eye tracking or facial expression recognition, to create even more natural interfaces. The integration of haptic feedback could also enhance the user experience by providing physical confirmation of digital actions, bridging the gap between the virtual and physical worlds.

Brain-Computer Interfaces: The Frontier of Zero-UI

Brain-computer interfaces (BCIs) represent the most ambitious and least mature form of Zero-UI, with applications currently focused primarily on medical and research settings. Non-invasive systems measure the brain's electrical activity through electrodes on the scalp (electroencephalography, or EEG), while invasive implementations interface directly with neurons. In both cases, software translates the recorded signals into commands. While still in early stages, BCIs have shown remarkable potential for restoring communication and mobility to individuals with severe disabilities, and companies like Neuralink are working toward consumer applications.

The engineering challenges for BCI systems are substantial. Non-invasive EEG headsets must distinguish meaningful brain signals from background noise, while invasive interfaces require delicate surgical implantation. Both approaches face latency issues—the delay between thought and action—that can make interactions feel unnatural. There are also significant ethical considerations around privacy and cognitive liberty that must be addressed as this technology develops.
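One simple way to separate a meaningful rhythm from background activity is to compare power in a frequency band against broadband power. The sketch below does this for the alpha band (8 to 12 Hz), which is associated with relaxed, eyes-closed wakefulness. It computes individual DFT bins with the Goertzel algorithm, using only the Python standard library. The band edges, the 1 to 40 Hz analysis range and the sampling rate in the usage example are illustrative assumptions. Real EEG pipelines add filtering, artifact rejection and per-user calibration.

```python
import math
from typing import Sequence, Tuple


def goertzel_power(samples: Sequence[float], k: int) -> float:
    """Squared magnitude of DFT bin k, computed with the Goertzel recurrence."""
    coeff = 2.0 * math.cos(2.0 * math.pi * k / len(samples))
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def band_power(samples: Sequence[float], fs: float, lo_hz: float, hi_hz: float) -> float:
    """Sum DFT power over the bins that fall between lo_hz and hi_hz inclusive."""
    n = len(samples)
    mean = sum(samples) / n
    centred = [x - mean for x in samples]  # remove the electrode's DC offset
    resolution = fs / n                    # frequency spacing between bins
    k_lo, k_hi = math.ceil(lo_hz / resolution), math.floor(hi_hz / resolution)
    return sum(goertzel_power(centred, k) for k in range(k_lo, k_hi + 1))


def relative_alpha(
    samples: Sequence[float],
    fs: float,
    alpha: Tuple[float, float] = (8.0, 12.0),
    broadband: Tuple[float, float] = (1.0, 40.0),
) -> float:
    """Fraction of broadband power in the alpha band; 50/60 Hz mains hum lies outside both."""
    total = band_power(samples, fs, *broadband)
    return band_power(samples, fs, *alpha) / total if total > 0 else 0.0


# Usage: one second of a synthetic 10 Hz rhythm buried under strong 50 Hz mains hum.
fs = 256  # hypothetical sampling rate in Hz
t = [i / fs for i in range(fs)]
signal = [math.sin(2 * math.pi * 10 * x) + 3 * math.sin(2 * math.pi * 50 * x) for x in t]
print(round(relative_alpha(signal, fs), 2))
```

Restricting the analysis to 1 to 40 Hz is one crude form of noise handling. The hum is three times larger than the rhythm, yet it does not dilute the alpha ratio.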

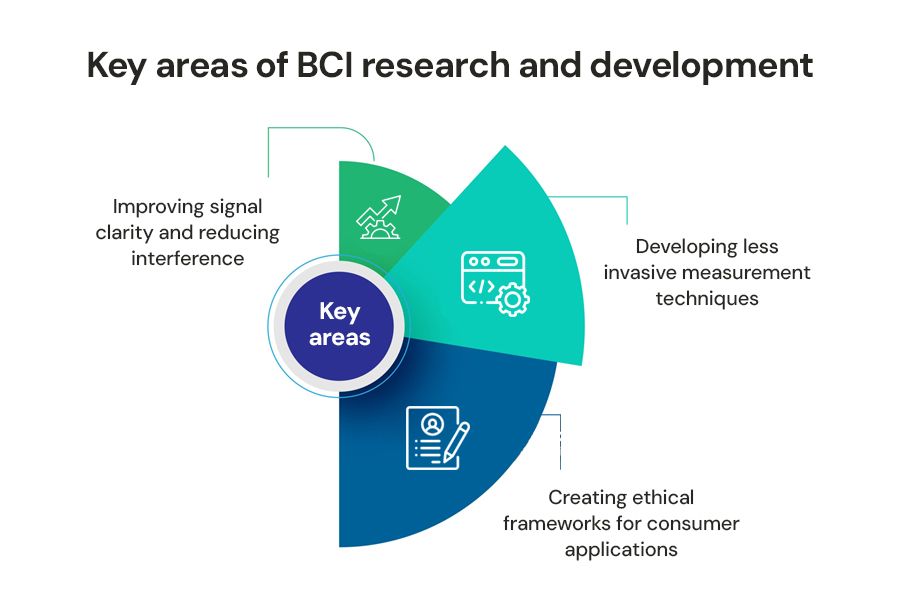

Key areas of BCI research and development:

Despite these hurdles, progress in BCI technology continues at a rapid pace. Recent advances in machine learning have improved signal interpretation, while miniaturisation of hardware has made some systems more practical for everyday use. In the coming years, we may see BCIs move beyond medical applications into areas like gaming, augmented reality, and even workplace productivity tools. However, widespread adoption will depend on overcoming not just technical barriers but also social acceptance—many potential users remain uncomfortable with the idea of technology reading their thoughts.

For businesses considering BCI integration, partnering with specialised software product development services will be essential to navigate this complex and rapidly evolving field. Early adopters should focus on clear value propositions where BCI offers unique advantages over other input methods, such as hands-free control in hazardous environments or enhanced accessibility solutions.

Implementing Zero-UI: Key Considerations for Businesses

Adopting Zero-UI technologies demands strategic planning to ensure seamless integration. Start by identifying use cases where voice, gesture, or brain-computer interfaces genuinely enhance usability, such as voice controls in hands-busy environments or gesture interfaces in sterile medical settings. Technical implementation requires specialised hardware and software, rigorous testing, and expertise in human-centred design to ensure reliability across diverse conditions. Partnering with experienced product developers can streamline this process while addressing both functionality and user adaptability.

Privacy and security are critical, as Zero-UI often involves sensitive biometric data. Measures like local data processing, clear consent mechanisms, and transparent policies help build trust and support regulatory compliance. Additionally, Zero-UI should complement, not replace, existing interfaces, offering users flexibility through a multimodal approach. Combining voice, gesture, and traditional inputs ensures accessibility and adaptability across different scenarios, enhancing the overall user experience.
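A multimodal fallback can be as simple as checking the current context before offering an input method. The sketch below assumes three hypothetical sensor readings: ambient noise in decibels, light level in lux, and a hands-busy flag. It also assumes illustrative thresholds and camera-based gesture sensing, which, unlike radar, needs some light. It always keeps conventional touch input available, so users are never locked out.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical thresholds; real values depend on the microphone and camera used.
MAX_NOISE_DB = 70.0   # above this, speech recognition is assumed unreliable
MIN_LIGHT_LUX = 10.0  # below this, camera-based gesture tracking is assumed unreliable


@dataclass
class Context:
    ambient_noise_db: float
    ambient_light_lux: float
    hands_busy: bool


def available_modalities(ctx: Context) -> List[str]:
    """Rank usable input methods for the current context, always keeping touch as a fallback."""
    options = []
    if ctx.ambient_noise_db < MAX_NOISE_DB:
        options.append("voice")
    if ctx.ambient_light_lux >= MIN_LIGHT_LUX and not ctx.hands_busy:
        options.append("gesture")
    options.append("touch")  # conventional input stays available in every context
    return options


print(available_modalities(Context(ambient_noise_db=85, ambient_light_lux=2, hands_busy=True)))
# ['touch']
```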

The Future of Zero-UI: Where Are We Heading?

Zero-UI technologies are evolving rapidly, with key trends focusing on context-aware interfaces and seamless multimodal interactions. Future systems will anticipate user needs through environmental sensors and personal history, adjusting settings like lighting or navigation without explicit commands. Additionally, interactions will blend voice, gestures, and facial expressions, creating fluid, natural experiences—like starting a task by voice, refining it with a gesture, and confirming with a nod.
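The voice, gesture and nod sequence described above can be modelled as a small state machine over events from separate recognisers. This sketch assumes each recogniser already emits discrete, timestamped events, so modalities are combined at the decision level rather than at the signal level. The modality names, event values and five-second timeout are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Event:
    modality: str     # hypothetical: "voice", "gesture", or "head"
    value: str        # recogniser output, e.g. a transcript or gesture label
    timestamp: float  # seconds


@dataclass
class MultimodalSession:
    """Start a task by voice, refine it with gestures, confirm with a nod."""
    timeout_s: float = 5.0  # hypothetical: abandon a task after this much inactivity
    task: Optional[str] = None
    adjustments: List[str] = field(default_factory=list)
    last_event_time: float = 0.0

    def handle(self, event: Event) -> Optional[str]:
        """Process one event; return the confirmed command when the user nods."""
        if self.task is not None and event.timestamp - self.last_event_time > self.timeout_s:
            self._reset()
        self.last_event_time = event.timestamp
        if event.modality == "voice":
            self.task, self.adjustments = event.value, []  # a new request replaces any pending one
            return None
        if self.task is None:
            return None  # gestures and head movements without a pending task are ignored
        if event.modality == "gesture":
            self.adjustments.append(event.value)
        elif event.modality == "head" and event.value == "nod":
            confirmed = self.task + "".join(f" [{a}]" for a in self.adjustments)
            self._reset()
            return confirmed
        elif event.modality == "head" and event.value == "shake":
            self._reset()
        return None

    def _reset(self) -> None:
        self.task, self.adjustments = None, []


session = MultimodalSession()
for e in [Event("voice", "dim the lights", 0.0), Event("gesture", "swipe_down", 1.0), Event("head", "nod", 2.0)]:
    result = session.handle(e)
print(result)  # dim the lights [swipe_down]
```

Ignoring stray gestures and nods when no task is pending is one cheap guard against the accidental activations discussed earlier.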

Advances in AI will further personalise Zero-UI, with systems learning user preferences instead of requiring rigid commands. This demands powerful machine learning models and innovations in edge computing, so that more of the processing can happen on or near the device itself. For businesses, staying competitive will mean investing in R&D and collaborating with specialised software development services to master the convergence of hardware, software, and human-centric design.

Conclusion: Embracing the Invisible Interface

The shift to Zero-UI marks a major evolution in human-computer interaction, moving beyond screens to more natural, intuitive interfaces like voice and gesture control. While the potential is vast, success depends on balancing advanced technology with human-centred design. Businesses must recognise this as an emerging reality, not just a futuristic idea, and start exploring these technologies now to stay ahead.

The key lies in thoughtful implementation, prioritising real user needs over novelty, and partnering with experts to tackle engineering challenges. Companies that master this will create seamless, invisible tech that blends into daily life. The future of interaction isn’t about screens or buttons but about systems that understand and respond to us naturally. The question isn’t if Zero-UI will dominate, but how fast your business can adapt.