What Is Kubernetes?

Kubernetes is an open-source platform for deploying, scaling, and managing containerized applications. It was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF), which is part of the Linux Foundation.

Kubernetes automates the operational tasks of container management and provides built-in commands for deploying apps, rolling out changes, scaling up and down as your needs change, monitoring your apps, and more, which makes application management easier.

Why Do We Use Kubernetes?

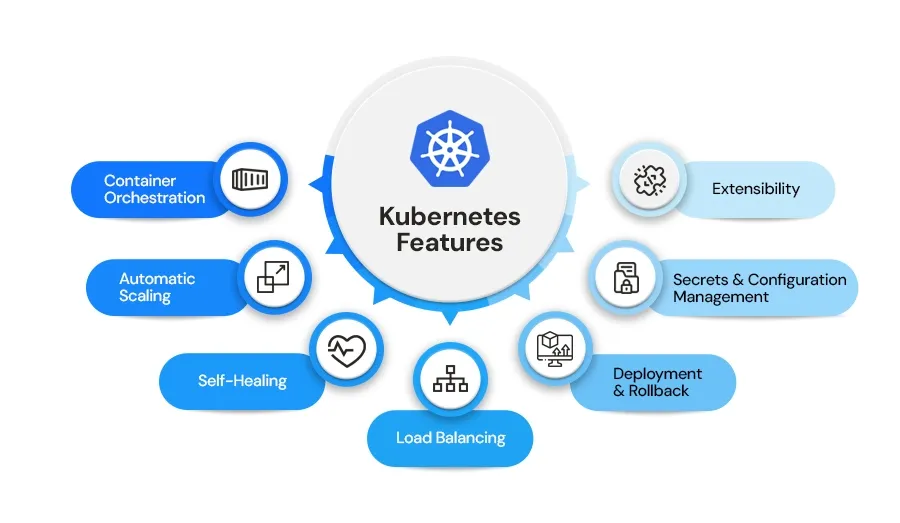

Key Features of Kubernetes

Container orchestration: Kubernetes automates the deployment, scaling, and operation of containerized applications.

Automatic Scaling: Your application can be scaled up or down automatically according to demand, saving you money during slow periods and ensuring you have enough resources to handle traffic spikes.

Self-healing: Kubernetes detects failing containers and restarts them automatically, so a failed container is brought back up without manual intervention.

Load Balancing: Kubernetes has built-in load balancing and distributes incoming requests across the pods that run your application.

Deployment and rollback: Kubernetes makes deployments simple and supports rolling updates, so applications can be updated without downtime. If the current version fails, you can roll back to the previous version of the application.

Secrets and configuration management: Kubernetes lets you create Secret and ConfigMap objects. A Secret stores confidential information, and a ConfigMap stores configuration such as environment variables.

Extensibility: Kubernetes integrates with tools such as monitoring systems and CI/CD pipelines. It also supports extensions and plugins that add functionality.
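As a rough illustration of the self-healing feature, the sketch below adds a liveness probe to a container. The Pod name, image reference, and probe timings are assumptions picked to match the sample app later in this guide (which listens on port 5000); they are not required values.

```yaml
# Illustrative Pod only: the name, image, and timings are assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: node-app-probe-demo
spec:
  containers:
    - name: node-app-container
      image: your_username/image_name:tag   # placeholder image reference
      ports:
        - containerPort: 5000
      livenessProbe:
        httpGet:
          path: /              # the sample app answers on its home route
          port: 5000
        initialDelaySeconds: 5 # wait before the first check
        periodSeconds: 10      # check every 10 seconds
        failureThreshold: 3    # restart after 3 consecutive failures
```

With a probe like this, the container is restarted when the HTTP check fails three times in a row, without anyone having to intervene.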

Advantages

Scalability: Kubernetes helps applications grow or shrink as needed by adding or removing copies of Pods depending on how many resources they're using.

High Availability: Kubernetes keeps your apps up and running by running multiple replicas and automatically replacing the ones that fail.

Continuous Deployment: Kubernetes works smoothly with continuous integration and continuous deployment setups, making it easier to automate testing and deploying your apps.

Multi-Cloud Deployment: Kubernetes runs both on-premises and on cloud providers, so you can run your apps within your own network or across multiple cloud services.

Microservices Architecture: Kubernetes is great for using microservices because it helps manage complicated, separate systems on one platform.
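The scalability point above can be sketched with a HorizontalPodAutoscaler. This is a minimal example under assumptions: it targets the `node-app-deployment` Deployment created later in this guide, the replica bounds and the 70% CPU target are arbitrary, and it only works if the cluster has a metrics source (such as metrics-server) and the containers declare CPU requests.

```yaml
# Illustrative autoscaler: bounds and the CPU target are assumptions.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: node-app-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: node-app-deployment   # the Deployment to scale
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # add Pods when average CPU use exceeds 70% of requests
```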

Understanding the Kubernetes Components

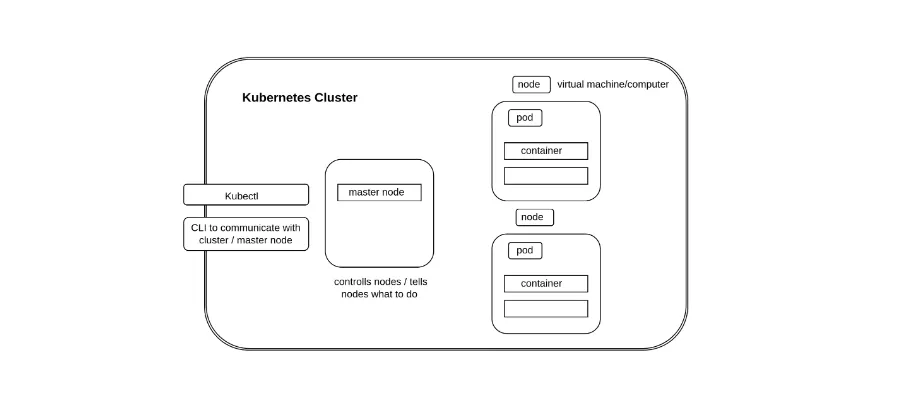

To gain a better understanding of the Kubernetes components, let's look at the diagram above.

kubectl: kubectl is the command-line tool for interacting with the control plane (also called the master node).

Master node/control plane: The control plane is responsible for cluster management. It manages all of the cluster's operations, including scheduling apps, keeping them in the desired state, scaling them, and rolling out updates.

Node: In a Kubernetes cluster, a node is a virtual machine or a physical computer that acts as a worker and runs your pods.

Pod: A pod is a group of one or more containers and is the smallest deployable unit in Kubernetes.
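To make "one or more containers" concrete, here is a minimal sketch of a Pod that runs two containers side by side. The Pod name, container names, images, and the helper command are all illustrative assumptions.

```yaml
# Illustrative two-container Pod: all names and images are assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: two-container-demo
spec:
  containers:
    - name: web
      image: nginx:1.25
      ports:
        - containerPort: 80
    - name: helper
      image: busybox:1.36
      # Prints the date every 30 seconds so the container keeps running.
      command: ["sh", "-c", "while true; do date; sleep 30; done"]
```

Both containers are scheduled onto the same node and share the Pod's network, which is why a Pod, not a single container, is the unit Kubernetes manages.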

Tools for Kubernetes

You can use a variety of tools to set up a local cluster and run Kubernetes locally. Here are some popular choices:

1. Minikube: Minikube is a good starting tool for learning Kubernetes concepts. It sets up a local Kubernetes cluster, which by default is a single-node cluster.

2. Docker Desktop: You may run Kubernetes locally by enabling the Kubernetes option in Docker Desktop. Using Docker containers, it offers a seamless development, testing, and deployment process for Kubernetes applications.

3. Kind: Kind is a tool that runs local Kubernetes clusters using Docker container "nodes". It is well suited to development and testing scenarios because it can create multi-node Kubernetes clusters on a single machine. Kind is lightweight and easy to use, and it works with standard Kubernetes features.

4. k3d: k3d runs K3s, a lightweight Kubernetes distribution, in Docker and is another way to manage Kubernetes clusters locally. It is more manageable than a full Kubernetes distribution and makes it easy to create local clusters. k3d is easy to install and supports features like multi-node clusters and custom configuration.

5. MicroK8s: MicroK8s is a lightweight Kubernetes distribution designed with edge deployments, CI/CD, IoT, and developer workstations in mind. It supports features like add-ons, clustering, and snap integration and offers a complete Kubernetes experience on a single system. MicroK8s is appropriate for both development and production use cases and is simple to set up and maintain.

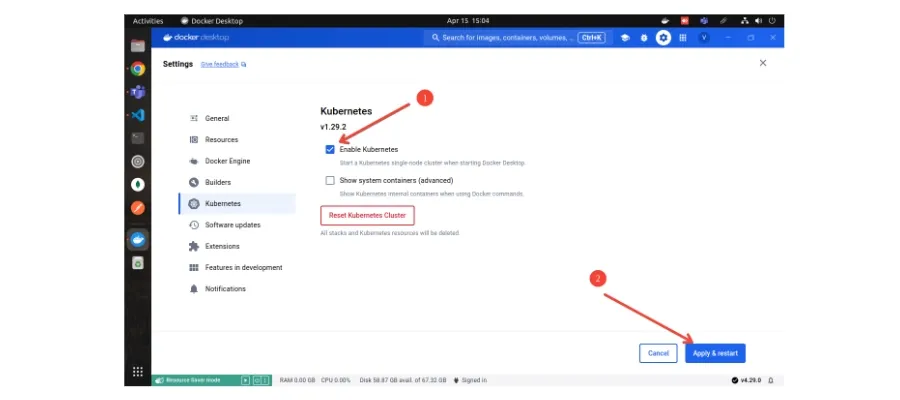

In this guide, we'll use Docker Desktop to create a Kubernetes cluster easily. Docker Desktop makes it simple to set up and manage containers.

Common Kubernetes Terminology and Why We Use It

Deployment File: In Kubernetes, the deployment file specifies how your application is deployed and managed within the cluster. It defines the number of replicas, the update strategy, resource limits, and the container image, among other things.

Why Use Deployment Files: Deployment files let you automate the deployment and scaling of Kubernetes applications. By specifying the desired state of your application in a deployment file, you can replicate and maintain it across environments without manual intervention.
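The fields mentioned above (replicas, update strategy, resource limits, container image) can be sketched in one manifest. This is an illustrative example under assumptions: the rolling-update settings and the CPU and memory figures are arbitrary, and the image reference is a placeholder.

```yaml
# Illustrative Deployment: strategy settings and resource figures are assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: node-app-deployment
spec:
  replicas: 3                 # desired number of Pods
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1             # at most one extra Pod during an update
      maxUnavailable: 0       # never drop below the desired replica count
  selector:
    matchLabels:
      name: node-app
  template:
    metadata:
      labels:
        name: node-app        # must match the selector above
    spec:
      containers:
        - name: node-app-container
          image: your_username/image_name:tag   # placeholder image reference
          resources:
            requests:
              cpu: 100m
              memory: 128Mi
            limits:
              cpu: 500m
              memory: 256Mi
```

Kubernetes continuously compares this desired state with what is actually running and creates or removes Pods to close the gap.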

Service File: In Kubernetes, a service file specifies how your application can be reached from inside and outside the cluster. To route traffic to the right pods, it defines the type of service (ClusterIP, NodePort, LoadBalancer), the ports, and the selectors.

Why Use Service Files?: Service files allow your application's components to communicate with one another within the Kubernetes cluster. Even if the underlying infrastructure or pod IP addresses change, they offer a stable endpoint for reaching your application. You can also use service files to make your application accessible to outside users or services, such as web browsers or API clients.
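For communication inside the cluster, a ClusterIP Service is usually enough. The sketch below is an assumption-based example: the Service name and port 80 are made up, while the `name: node-app` selector and target port 5000 match the sample app used later in this guide.

```yaml
# Illustrative internal Service: the name and port 80 are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: node-app-internal
spec:
  type: ClusterIP             # reachable only from inside the cluster
  selector:
    name: node-app            # routes to Pods carrying this label
  ports:
    - port: 80                # port the Service listens on
      targetPort: 5000        # port the container listens on
```

Other pods in the same namespace could then reach the app at `http://node-app-internal`, no matter which pod IPs sit behind the Service.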

Secret File: In Kubernetes, a Secret is an object used to store sensitive information, such as passwords, tokens, or other confidential data. Secrets are stored in the cluster and can be referenced by pods or other resources that need the sensitive data. Secret values are base64-encoded by default, which is an encoding rather than encryption, but they can also be encrypted at rest for additional security. Common use cases for Secrets include storing database passwords, API tokens, SSL certificates, and SSH keys.

Why Use Secret File: Secrets provide a secure way to store and manage sensitive information within Kubernetes. They allow you to decouple sensitive data from application code and configuration, reducing the risk of exposure. Secrets can be mounted as volumes or exposed as environment variables to pass sensitive data to pods securely.
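A minimal sketch of a Secret exposed to a pod as an environment variable follows. All names and the password value are placeholders invented for illustration. The `stringData` field accepts plain text and Kubernetes stores it base64-encoded.

```yaml
# Illustrative Secret and consumer Pod: all names and values are placeholders.
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials
type: Opaque
stringData:
  DB_PASSWORD: change-me      # plain text here; stored base64-encoded
---
apiVersion: v1
kind: Pod
metadata:
  name: secret-demo
spec:
  containers:
    - name: app
      image: your_username/image_name:tag   # placeholder image reference
      env:
        - name: DB_PASSWORD
          valueFrom:
            secretKeyRef:
              name: db-credentials   # the Secret defined above
              key: DB_PASSWORD
```

In a real project, avoid committing a manifest that contains actual secret values to version control.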

ConfigMap File: A ConfigMap in Kubernetes is an object used to store non-sensitive configuration data, such as environment variables, configuration files, or command-line arguments. ConfigMaps are stored in the cluster and can be referenced by pods or other resources to inject configuration data at runtime. Common use cases for ConfigMaps include storing application configuration, environment variables, and application settings that may vary between environments.

Why Use ConfigMap Files: ConfigMaps provide a centralized and flexible way to manage configuration data within Kubernetes. They allow you to separate configuration from application code, making it easier to update and manage configuration settings independently of the application. ConfigMaps can be mounted as volumes or exposed as environment variables to inject configuration data into pods, so configuration can change without rebuilding the application image. Values mounted as volumes are eventually refreshed in running pods, while environment variables are read only when a container starts.
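As a sketch of the environment-variable approach, the manifest below defines a ConfigMap and loads every key from it into a container. The ConfigMap name, keys, and values are assumptions chosen for illustration.

```yaml
# Illustrative ConfigMap and consumer Pod: names, keys, and values are assumptions.
apiVersion: v1
kind: ConfigMap
metadata:
  name: node-app-config
data:
  APP_ENV: "development"
  LOG_LEVEL: "debug"
---
apiVersion: v1
kind: Pod
metadata:
  name: configmap-demo
spec:
  containers:
    - name: app
      image: your_username/image_name:tag   # placeholder image reference
      envFrom:
        - configMapRef:
            name: node-app-config   # each key becomes an environment variable
```

Inside the container, the application would see `APP_ENV` and `LOG_LEVEL` as ordinary environment variables.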

Example:

Prerequisite:

Docker

Docker Desktop

Kubectl

Step-1: First, enable the Kubernetes cluster in Docker Desktop (Settings > Kubernetes > Enable Kubernetes).

If you prefer not to use Docker Desktop, you can download Minikube directly from the official Kubernetes website to set up a Kubernetes cluster.

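Step-2: Create a Node project and make a simple app.js file. Because the Dockerfile in the next step starts the app with npm start, make sure package.json lists express as a dependency and defines a start script that runs app.js.

    const express = require('express');

    const app = express();

    // Home route: returns a small HTML page.
    app.get('/', (req, res) => {
      res.send(`
        <h1>Let's learn Kubernetes together.</h1>
      `);
    });

    // Test route: throws on purpose so Express responds with a 500 error.
    app.get('/error', (req, res) => {
      throw new Error("error occurred");
    });

    // This port must match EXPOSE in the Dockerfile and the ports in the manifests.
    app.listen(5000);

Step-3: Create a Dockerfile and a .dockerignore file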

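Dockerfile

    FROM node:16-alpine
    WORKDIR /app
    # Copy package.json first so the dependency layer can be cached.
    COPY package.json .
    RUN npm install
    # Copy the rest of the source code.
    COPY . .
    EXPOSE 5000
    CMD [ "npm", "start" ]

.dockerignore file

    node_modules
    Dockerfile

Step-4: Create the Docker image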

    docker image build -t your_username/image_name:tag .

Step-5: Push the image to Docker Hub

    docker login
    docker push your_username/image_name:tag

Step-6: Make a deployment file (deployment_file.yaml)

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: node-app-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          name: node-app
      template:
        metadata:
          labels:
            name: node-app
        spec:
          containers:
            - name: node-app-container
              # Use the image pushed in Step-5.
              image: your_username/image_name:tag
              ports:
                - containerPort: 5000

Step-7: Make a service file (service_file.yaml)

    apiVersion: v1
    kind: Service
    metadata:
      name: node-app-service
    spec:
      selector:
        name: node-app
      type: LoadBalancer
      ports:
        - port: 7000
          targetPort: 5000

Step-8: Run the deployment and service files

    kubectl apply -f "deployment_file.yaml"
    kubectl apply -f "service_file.yaml"

Step-9: Open a browser and go to localhost:7000

Your Node application is now running on port 7000.

Conclusion:

Kubernetes represents a powerful tool for managing containerized applications, offering scalability, reliability, and flexibility in modern cloud-native environments. Through this guide, we've explored the fundamentals of Kubernetes, including its architecture, key components like pods, deployments, and services, as well as advanced topics such as secrets and ConfigMaps.
