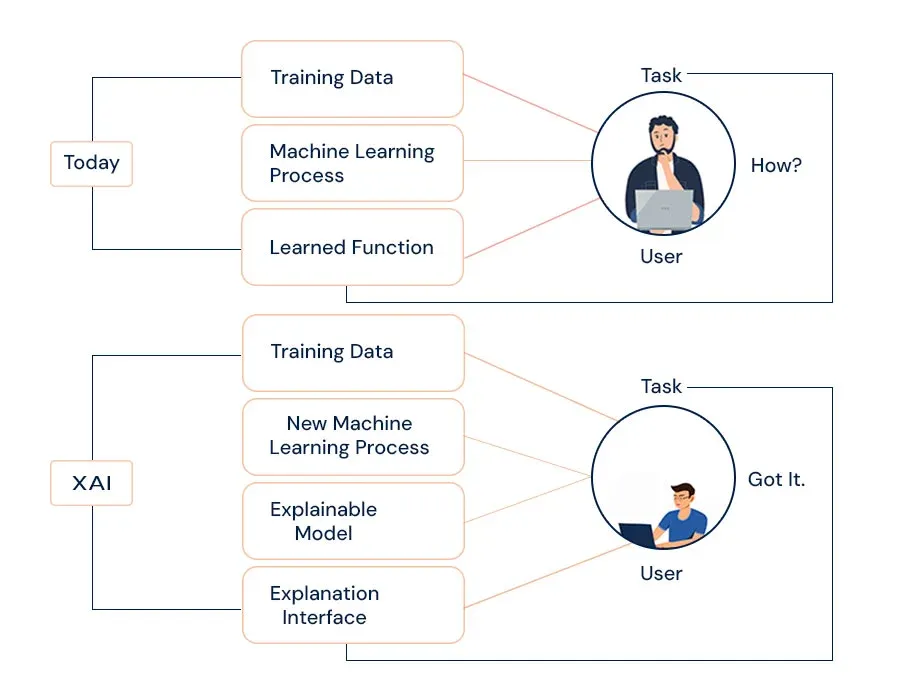

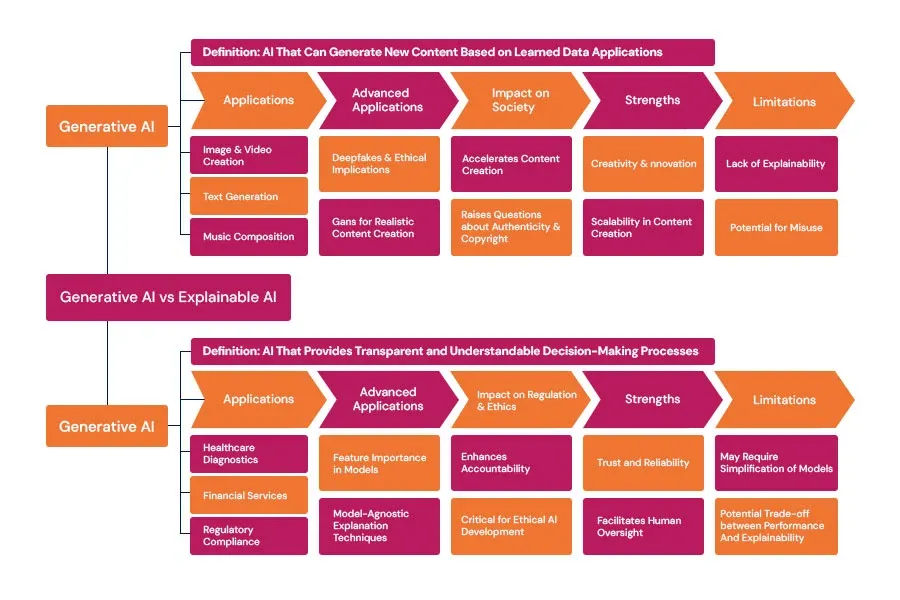

Explainable AI is among the most important developments in the fast-moving field of artificial intelligence. As AI systems are integrated into more of our lives, from healthcare diagnoses to autonomous vehicles, demand has grown for transparency about how these systems reach their decisions.

AI development services can help you solve problems more effectively and make better decisions. Traditional AI models, like deep neural networks, often work like black boxes. But AI experts can make these models more transparent. By providing explainable AI, they can help you understand how AI systems reach their conclusions, reducing worries about bias, mistakes, and trust issues.

Organizations need a thorough understanding of how their AI systems make decisions, along with monitoring and accountability for those systems, rather than trusting them blindly. Explainable AI helps people understand and explain machine learning algorithms, deep learning, and neural networks.

What is Explainable AI?

Explainable AI and interpretable machine learning give organizations access to the decision-making that underlies their AI technology and let them adjust it where needed. Explanations also help end users trust that the AI is making sound decisions, which improves the user experience of the product or service. The key question is: when does an AI system give you enough confidence in a decision that you can trust it?

Why Explainable AI (XAI)?

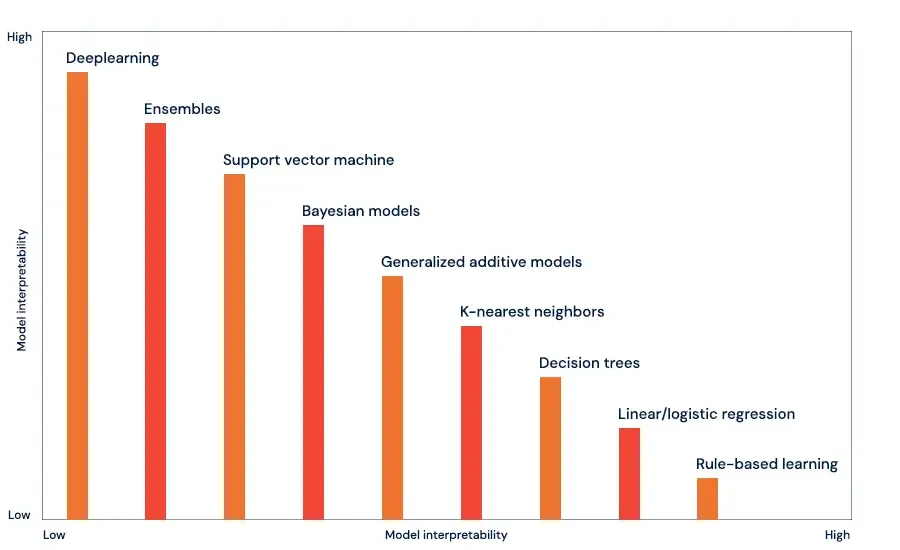

Machine Learning models are often perceived to be black boxes that are impossible to interpret. Neural networks used in Deep Learning are among the most challenging for a human to decipher. Bias, often based on race, gender, age, or location, has always been a significant risk in training AI models.

In addition, the performance of AI models can drift or degrade when production data differs from the training data. It is therefore important to monitor and manage models continuously to promote AI explainability while measuring the business impact of these algorithms.
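One way to watch for the drift described here is to compare how a feature is distributed in training data and in production data. The sketch below uses the Population Stability Index (PSI), a metric the article does not name and which is chosen here only for illustration. The function name, the bin count, and the synthetic Gaussian samples are all assumptions.

```python
import math
import random

def population_stability_index(expected, actual, bins=10):
    """PSI between a training-time sample and a production sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all training values are equal

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the training range land in the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Synthetic data: one production sample matches training, the other has a shifted mean.
rng = random.Random(0)
train = [rng.gauss(0.0, 1.0) for _ in range(5000)]
prod_same = [rng.gauss(0.0, 1.0) for _ in range(5000)]
prod_shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]

psi_same = population_stability_index(train, prod_same)
psi_shifted = population_stability_index(train, prod_shifted)
print(f"PSI (no drift): {psi_same:.3f}, PSI (shifted): {psi_shifted:.3f}")
```

A commonly quoted rule of thumb treats a PSI below about 0.1 as stable and above about 0.25 as a significant shift, though suitable thresholds depend on the application.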

Explainable AI promotes end-user trust, model auditability, and productive use of AI. It also mitigates the compliance, legal, security, and reputational risks of AI.

Explainable artificial intelligence is one of the key requirements for implementing Responsible AI, a methodology for the large-scale implementation of AI methods in real organizations with fairness, model explainability, and accountability. To adopt AI responsibly, organizations should embed ethical principles into AI applications and processes by building AI systems based on trust and transparency.

What is the Importance of Explainable AI?

Minimize Bias

AI algorithms mirror the biases embedded in the data on which they were trained. XAI techniques reveal the inner workings of an algorithm, which helps practitioners detect that bias and improve the model's performance by tuning parameters or updating training techniques.
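A simple check that often accompanies this kind of analysis is to compare how often a model gives a positive outcome to each group. The sketch below is an illustration only: the predictions, the group labels "a" and "b", and the function name are made up, and a gap in selection rates is a signal to investigate, not proof of unfair treatment.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive predictions (1 = positive outcome) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

# Made-up model outputs for ten applicants from two hypothetical groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
parity_gap = max(rates.values()) - min(rates.values())
print(rates, parity_gap)
```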

Feature Importance

Feature importance identifies the features (variables) that have the most influence on a model's predictions. This shows which factors have the greatest impact on a given decision.
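One common way to estimate feature importance is permutation importance: shuffle one feature at a time and measure how much the model's error grows. The sketch below assumes a hypothetical stand-in `model` and synthetic data. It is not tied to any particular library.

```python
import random

def model(row):
    """Hypothetical stand-in model: relies heavily on x0, weakly on x1, ignores x2."""
    return 3.0 * row[0] + 0.5 * row[1]

def mean_squared_error(rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(rows, targets, n_features, rng):
    """Increase in error after shuffling each feature column in turn."""
    baseline = mean_squared_error(rows, targets)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the target
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mean_squared_error(shuffled, targets) - baseline)
    return importances

rng = random.Random(42)
rows = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(500)]
targets = [model(r) for r in rows]

importances = permutation_importance(rows, targets, 3, rng)
print(importances)
```

The larger the increase in error, the more the model depends on that feature. The ignored feature scores zero because shuffling it never changes a prediction.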

LIME

Local Interpretable Model-agnostic Explanations (LIME) is a method for locally approximating a machine learning model's decision. It fits a simple, interpretable model around a particular instance to explain the prediction for that instance.
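The core idea can be sketched in a few steps: perturb the instance, query the black-box model, weight each perturbed sample by how close it is to the instance, and fit a weighted linear model. The code below is a simplified illustration, not the LIME library itself. The `black_box` function, the kernel width, and the sampling scale are assumptions.

```python
import math
import random

def black_box(x):
    """Hypothetical non-linear model we want to explain."""
    return x[0] ** 2 + math.sin(x[1])

def solve(a, b):
    """Solve the small linear system a @ w = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def local_linear_explanation(instance, n_samples=2000, scale=0.3,
                             kernel_width=0.5, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance."""
    rng = random.Random(seed)
    d = len(instance)
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(n_samples):
        sample = [v + rng.gauss(0.0, scale) for v in instance]
        dist2 = sum((s - v) ** 2 for s, v in zip(sample, instance))
        weight = math.exp(-dist2 / kernel_width ** 2)  # nearer samples count more
        feats = [1.0] + [s - v for s, v in zip(sample, instance)]
        y = black_box(sample)
        # Accumulate the weighted normal equations X^T W X and X^T W y.
        for i in range(d + 1):
            xty[i] += weight * feats[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * feats[i] * feats[j]
    intercept, *coefs = solve(xtx, xty)
    return intercept, coefs

intercept, coefs = local_linear_explanation([2.0, 0.0])
print(coefs)
```

Near the point (2, 0), the surrogate's coefficients should land close to the local slopes of the black box (about 4 for the first feature and about 1 for the second), which is what makes the simple model a faithful local description.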

SHAP

SHAP (SHapley Additive exPlanations) fairly distributes a prediction among the features that contributed to it. It describes how much each individual feature moves the model's output.
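For a model with only a few features, Shapley values can be computed exactly by enumerating every coalition of features. The sketch below does this for a hypothetical three-feature `model`, using a fixed all-zero baseline to stand in for "feature absent". This is a simplifying assumption: the SHAP library instead averages over background data and uses faster approximations.

```python
from itertools import combinations
from math import factorial

def model(x):
    """Hypothetical model with an interaction between features 0 and 1."""
    return 2.0 * x[0] + x[1] + x[0] * x[1] - 3.0 * x[2]

def shapley_values(instance, baseline):
    n = len(instance)

    def value(coalition):
        # Features in the coalition take the instance's value; the rest stay at baseline.
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                # Shapley weight for a coalition of this size.
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi += weight * (value(set(subset) | {i}) - value(set(subset)))
        phis.append(phi)
    return phis

instance = [1.0, 2.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(instance, baseline)
print(phis)
```

The contributions add up to the difference between the model's output for the instance and for the baseline, and the interaction term is split evenly between the two features that share it.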

Attention Mechanisms

Attention mechanisms in neural networks place different amounts of emphasis on different parts of the input, offering insight into which parts the model focused on to make a specific prediction.
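As a rough illustration, the weights in scaled dot-product attention can be computed and read off directly. The token list, key vectors, and query vector below are made up for the example. In a real network they would be learned.

```python
import math

def softmax(scores):
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

tokens = ["the", "movie", "was", "terrible"]
keys = [[0.1, 0.0], [0.4, 0.2], [0.0, 0.1], [1.5, 1.2]]  # made-up key vectors
query = [1.0, 1.0]                                       # made-up query vector

weights = attention_weights(query, keys)
most_attended = tokens[weights.index(max(weights))]
for token, weight in zip(tokens, weights):
    print(f"{token:>9}: {weight:.2f}")
```

The weights sum to one, so they can be read as the share of attention each token receives. They show where the model looked, which is a hint about its reasoning and not a full explanation.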

Anchors

Anchors are minimal, interpretable, rule-based explanations for individual predictions. An anchor states the conditions that must hold for the prediction to stay the same.
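A candidate anchor can be judged by its precision (how often the prediction holds when the rule is satisfied) and its coverage (how often the rule applies). The sketch below estimates both by random sampling for a hypothetical loan classifier. The model, feature ranges, and rules are all assumptions, and a real anchors implementation also searches for the rule instead of taking it as given.

```python
import random

def model(row):
    """Hypothetical loan classifier: approves when income is high and debt is low."""
    return "approve" if row["income"] > 50 and row["debt"] < 20 else "reject"

def sample_row(rng):
    """Draw a random applicant from assumed feature ranges."""
    return {
        "income": rng.uniform(0, 100),
        "debt": rng.uniform(0, 50),
        "age": rng.uniform(18, 80),
    }

def rule_precision(rule, target, n_samples=5000, seed=0):
    """Return (precision, coverage) of a rule for a target prediction."""
    rng = random.Random(seed)
    rows = [sample_row(rng) for _ in range(n_samples)]
    covered = [row for row in rows if rule(row)]
    hits = sum(model(row) == target for row in covered)
    return hits / len(covered), len(covered) / n_samples

def anchor(row):
    return row["income"] > 60 and row["debt"] < 15

def weak_rule(row):
    return row["income"] > 60

anchor_precision, anchor_coverage = rule_precision(anchor, "approve")
weak_precision, weak_coverage = rule_precision(weak_rule, "approve")
print(anchor_precision, anchor_coverage)
print(weak_precision, weak_coverage)
```

The first rule is a valid anchor because the prediction never changes while it holds, whatever the other features are. The second rule applies more often but leaves the prediction undetermined.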

Tools for Explainable AI

Various tools and libraries have been developed to support Explainable AI by giving users insight into how machine learning models make decisions. Some notable ones are:

SHAP (SHapley Additive exPlanations)

A Python package for explaining the output of any machine learning model using Shapley values, a concept from game theory. It provides a unified measure of feature importance.

LIME (Local Interpretable Model-agnostic Explanations)

A Python library that generates locally faithful explanations for the predictions of machine learning models by perturbing the input data and observing how the model's output changes.

DALEX

An R package offering a variety of tools for describing how complex machine learning models behave. It provides interpretation and visualization techniques, including variable-importance plots and other model-level interpretability methods.

ELI5

ELI5 is a Python package that helps users explain the predictions of their machine learning models. It supports a variety of models and provides explanations at both global and local levels.

InterpretML

InterpretML is an open-source Python library by Microsoft Research aimed at interpreting machine learning models. It contains procedures for explaining model behavior and feature importance, with examples of how to build interpretable models.

Use Cases for Explainable AI (XAI)

Examples and case studies of explainable AI bring out both the benefits and the challenges of this approach. Some examples of how AI can be explained in different applications include:

In medical imaging, explainable AI techniques help identify the features that matter most when diagnosing diseases and disorders.

For instance, explainable AI methods have been applied to identify and visualize the most important features in a cancer diagnosis, giving insight into the factors that predict positive or negative outcomes.

In text translation and analysis, explainable AI techniques can identify which information is most important and valuable.

For example, AI interpretation techniques can be applied to sentiment classification to find the most prominent or frequently occurring words and phrases, giving insight into what drives correct or incorrect predictions.

In image recognition and classification, explainable AI methods can identify which features are most relevant and useful. For instance, AI interpretation techniques can highlight the regions of an image that matter most for classifying an object, showing what the model treats as most relevant about it.
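One simple way to find the image regions that matter most is occlusion sensitivity: blank out one patch at a time and record how much the classifier's score drops. The sketch below uses a tiny 4x4 "image" and a hypothetical scoring function in place of a real classifier. The patch size and function names are assumptions.

```python
def classifier_score(image):
    """Hypothetical scorer: responds only to brightness in the top-left 2x2 corner."""
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4.0

def occlusion_map(image, patch=2):
    """Score drop caused by zeroing each patch of a square image."""
    base = classifier_score(image)
    size = len(image)
    heat = [[0.0] * size for _ in range(size)]
    for top in range(0, size, patch):
        for left in range(0, size, patch):
            occluded = [row[:] for row in image]
            cells = [(r, c)
                     for r in range(top, min(top + patch, size))
                     for c in range(left, min(left + patch, size))]
            for r, c in cells:
                occluded[r][c] = 0.0
            drop = base - classifier_score(occluded)
            for r, c in cells:
                heat[r][c] = drop  # a larger drop means a more important region
    return heat

image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_map(image)
for row in heat:
    print(row)
```

Only the top-left patch shows a drop, matching the region this toy scorer actually uses. With a real classifier, the same map is usually drawn as a heatmap over the image.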

Conclusion

The rise of XAI reflects a shift in focus within the field of artificial intelligence. As AI becomes part of society, building trust and accountability alongside technological advancement has become very important. XAI offers a better view not only of how AI works but also of how AI can be made responsible and ethical. By opening the black box, it opens a path toward a future where intelligent machines are not only capable but also trusted partners for people and society.